Google's BigQuery - Part - 2

BigQuery is Google’s fully

managed, petabyte scale, low cost analytics data warehouse for business insights.

BigQuery Analyze petabytes of data using ANSI SQL at blazing-fast speeds, with zero

operational overhead that is No-Ops

service (no infrastructure to manage and no need a database administrator)

under secured governance and encrypted environment.

- Integration with popular visualization tools

- Flexible pricing model

- Pay only for what you use

- Flat-tier-pricing for dedicated use

- No pay for automatic cached query results

- Support of Geo-spatial data types & functions and Foundation for Business Intelligence and AI/ML.

- I would strongly suggest to read the blog "Introduction of BigQuery" to have a context.

Load job Limitations :

- 1,000 import jobs per table per day

- 10,000 import jobs per project per day

- File Size (for both CSV and JSON)

- 1 GB for compressed file

- 1 TB for uncompressed

- 4GB for uncompressed CSV with newlines in strings

- 10,000 files per import job

- 1 TB per import job

Recommendations:

- CSV/JSON must be split into chunks less than 1 TB

- Split to smaller files to smaller data unit (day, month)

- Easier error recovery

- Split Tables by Dates

- Minimize cost of data scanned

- Minimize query time

- Upload multiple files to Cloud Storage

- Allow parallel upload into BigQuery

- Denormalize your data

Federated BigQuery:

Federated

BigQuery

(bq) to

access direct sources without loading data into storage and querying them. Querying external different data sources without any issues –

Currently

supported data sources -

- Cloud Storage (CSV, Parquet, JSON, Avro, Orc etc…)

- Cloud SQL (mySQL, SQL Server, Postgres)

- Google Drive (Google Sheets)

- BigTable

**BQ doesn’t cache the result because it is external database and it needs authentication, network connection.

Schema Design in BigQuery:

Special schema design makes BigQuery lighting fast. Before going into details, let's see, "how is data stored into transaction database?".

OLTP (Online Transactional Processing) system keeps data into normalized form for its original form.

In OLTP database designing -

Master and child tables are created. Relationships are defined with primary and foreign keys. Mapping tables are created & finally DB design tries to apply all forms of normalization from 1NF to 6NF depending upon the use case of your business need.

When data is loaded into data warehouse, it needs to be denormalized to get high throughput performance. Denormalize data before loading into data warehouse as mentioned below-

Scheme Design in BigQuery

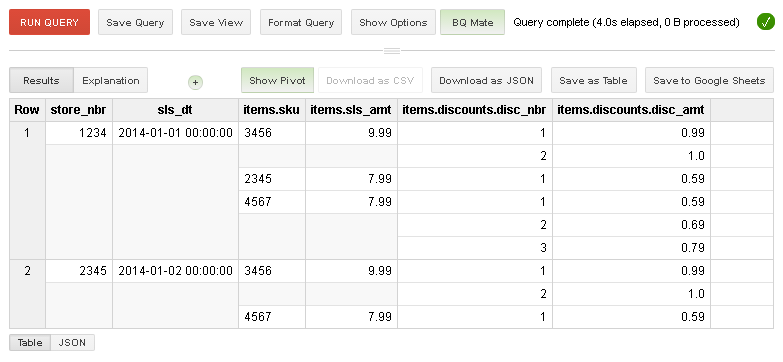

BigQuery supports simple data types such as integers, as well as more complex data types such as ARRAY and STRUCT. Nested and repeated support of complex data types give high efficiency to BigQuery. Data is stored into BigQuery in de-normalized form with nested & repeated column.

Just focus on the right side image, there are only 2 rows and order.Product.Name contains repeated values. This is because of Struct repeatable data type. What is struct data type?

STRUCT Data type:

- STRUCTS is logical container and type is RECORD.

- It contains period in the name of the column like Address.city

- Arrays can be part of regular fields or STRUCTS

- It can have mode ‘REPEATED’ or not.

- A single table can have many STRUCTS.

Limitation - When you load nested and repeated data, your schema cannot contain more than 15 levels of nested STRUCTs (RECORD types).

Example data in BigQuery table with repeated columns -

Partitioning tables help to improve query performance and cost optimization. Google BigQuery is columnar store, only charges for the columns that are read when a query is run. Partitioning allows to slice billing rows for the intersection of column queried and partitions used.

- A partition table is a special table that is divided into segments, called partitions.

- Easier to manage and query the data

- BigQuery stores the data in different shards based on date, datetime or timestamp column and ensures that all data in a block belongs to a single partition.

- Maintains more metadata than non-partitioned.

- Dividing a large table into smaller partitions improves query performance (reduction in query time).

- Costs by reducing the number of bytes read by a query.

- Expiration of partition table can be set.

Partition BigQuery tables by -

Ingestion Time (load column),

Date/Timestamp (on date/timestamp column)

Integer range (on integer column).

Ingestion Time (load column),

Date/Timestamp (on date/timestamp column)

Integer range (on integer column).

The syntax to query these partitions used to be the following:

SELECT c1, c3 FROM ...

WHERE _PARTITIONTIME BETWEEN "2020-02-06" AND "2020-06-05"

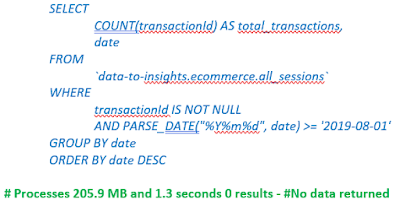

Data into BigQuery table is partitioned as:

This indicates if partitioned table doesn't contain data for August 2019 and you apply filter condition, query engine will tell you zero result would be returned so that you don't need to run query and avoid incurring any cost of the query.

In the above example you can notice that processed data is 205.9 MB but zero result is returned and good part is that BigQuery's query editor displays this message during parsing of the query that is as you are finished with writing of the query, this message will immediately be displayed at right bottom corner of the query editor. Hence no need to run the query.

- Clustered table data is automatically organized based on the contents of one or more columns in the table’s schema.

- When you cluster a table using multiple columns, the order of columns you specify is important. The order of the specified columns determines the sort order of the data.

- Clustering can improve performance of those queries which use filter clauses and those aggregates data.

- When data is written to a clustered table by a query job or a load job, BigQuery sorts the data using the values in the clustering columns.

When to use clustering

BigQuery supports clustering for both partitioned and non-partitioned tables.

- You need more granularity than partitioning alone allows. To get clustering benefits in addition to partitioning benefits, you can use the same column for both partitioning and clustering.

- Your queries commonly use filters or aggregation against multiple particular columns.

Setup clustering at Table Creation:

This is the following statement which you can use while creating a table where you can define partition and clustering.

- In streaming tables, the sorting fails over the time and so BigQuery has to re-cluster.

- It is free, maintenance-free and autonomous.

- Force re-clustering using DML statement

Clustering Benefits:

The following snapshot clearly shows the benefits of clustering in BigQuery. Notice the left hand side query which was run on non-clustered table and see that query took 20.7 secs and processed 2.2 TB whereas when same query was run on the clustered table, its query time was reduced by 4 times that is to 5.4 sec and 227.76 GB processed data. You can see how it has been optimized and reduced cost drastically.

Thanks for reading, please share comments.

References:

https://cloud.google.com/bigquery/what-is-bigquery

https://cloud.google.com/bigquery

https://medium.com/google-cloud/bigquery-optimized-cluster-your-tables-65e2f684594b

Very interesting. Thanks. It looks like very promising technology. I'm worried about the price. I believe that event with many cost optimization, it will be more expensive to have our data in Google's cloud services than having our own on-premises server; I mean, if they charge every time I need to make a query, maybe I won't want to query my own info!

ReplyDeleteWith the ever-increasing volume and variety of data, thedata lakeis becoming increasingly important for organizations. Organizations are no longer able to store and process all data in the same way. The data lake is the storehouse for all of the data in the enterprise, regardless of where it is stored. For instance, transactional data is stored in the data lake, but big data is also stored

ReplyDelete